Hello friends. From time to time here on Linkarati we cover news, trends, or presentations we believe worth sharing and amplifying.

Today I stumbled across a compelling tweet from Rae Hoffman:

if you saw this https://t.co/JN9T1IOBjc & didn't spend time watching it & doing this, good luck in your SEO career pic.twitter.com/YliYKcSFdq

— Rae Hoffman (@sugarrae) March 31, 2016

The link leads to SMX's video of Paul Haahr, Software Engineer at Google for 14 years, who is giving a presentation at SMX West 2016 about how Google works, from his perspective as a Google Ranking Engineer.

As Danny Sullivan—who introduces Paul—says, Paul's title doesn't reflect that he's part of the senior leadership from Google's ranking team.

As an SEO, this should make your heart skip a beat.

Danny reiterates how lucky we (the SEO community) are to have Paul present. And as you can see from her tweet, Rae believes strongly this is something every SEO needs to watch in depth, as well as take notes. Who am I to argue?

I’ve often found that writing coverage of a presentation teaches me even more than taking notes, since I have to take it a step further and write notes others can understand and appreciate. It adds yet another layer of critical thinking.

I decided to do just that, to both better understand the material myself and to help you digest the presentation faster. Win-win, right? Note: Rae has her own notes and coverage here.

I recommend you follow along with the video, which will be embedded throughout. Below I've also embedded Paul's presentation from Slideshare, which I will clip and post still images from to accompany my coverage.

Sit back, enjoy, and let me know what you think.

Let’s get into it.

Note: Emanuele Vaccari translated this post into Italian, along with some of his own thoughts. You can see his translated post here.

What A Google Ranking Engineer Does

I'm actually going to break one of my own rules for coverage right off the bat and ignore chronological order (but only for now — I'll go through the presentation chronologically, after this section).

Paul wove a theme through his presentation which, in my opinion, served as its backbone. That theme is "what do ranking engineers do?".

There were four versions of this answer, according to Paul, each slightly refining the role of ranking engineer:

- Write code for those [Google's search] servers. Source: Slide 16

- Look for new signals. Combine old signals in new ways. Source: Slide 19

- Optimize for our metrics [relevance/searcher intent and quality]. Source: Slide 24

- Move results with good ratings [from live experiments and human raters] up. Move results with bad ratings down. Source: Slide 55

Please note that brackets [ ] indicate my own interjections to clarify (imperfectly) Paul's slides.

So what does a Google Ranking Engineer do? The primary takeaway I received: make sure search is actually improving for users (the humans). And how do they do that? By making Google better match the Search Quality Rating Guidelines.

A later tweet from Paul to Rae again emphasized the importance of the Search Quality Rating Guidelines, and that SEOs really should read the entire document:

.@sugarrae Glad you liked. For transparency, I think the rater guidelines were actually a big deal. Read them yet?https://t.co/llmUQJbkQ8

— Paul Haahr (@haahr) March 31, 2016

My absolute favorite quote from Paul's presentation came while he was talking about Google's Search Quality Rating Guidelines, a topic he starts on at the 16:08 mark. The quote itself is from the 16:48 mark, during slide 32 in the presentation. Paul said:

"If you're wondering why Google is doing something, often the answer is to make it [search] look more like what the rater guidelines say."

Paul Haahr, Google Ranking Engineer, SMX West 2016

That's a powerful statement. Paul summarizes all the changes at Google as attempting to better match the Search Quality Rating Guidelines, which they've published! Long story short: you want to understand Google? Go read their quality guidelines.

Alright, let's jump into the chronological coverage of Paul's presentation.

Google Search Today

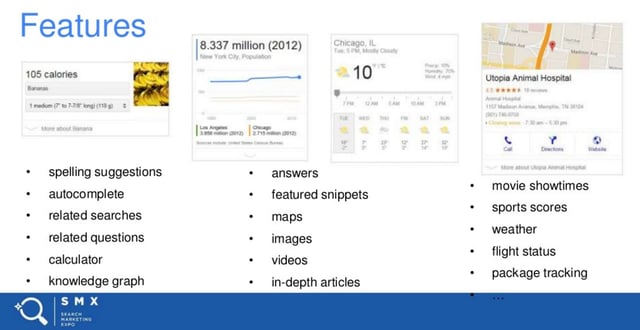

There are two themes in Google search today, according to Paul:

- Mobile First

- Features

Mobile is leading search queries, and Google is more and more thinking mobile first. This isn't new information for SEOs, but it's something Paul made a point to emphasize.

The second point Paul emphasized was the importance of features, particularly in mobile.

Slide Four

Interestingly, Paul said in summation of search today:

"We're going more and more into a world where search is being thought of as an assistant to all parts of your life."

Paul Haahr, Google Ranking Engineer, SMX West 2016

How Google Search Works

All of Google used to be 10 blue links. Paul broke down the problem of ranking in the 10 blue links era as "What documents do we show? What order do we show them in?"

Slide Seven

Interesting aside: Paul took a moment to clarify he wouldn't ever touch on the topic of ads. Specifically, Paul said:

"Ads are great, they make us a lot of money, they work very well for advertisers. But my job, we're explicitly told "don't think about the effect on ads, don't think about the effect on revenue - just think about helping the user."

Paul Haahr, Google Ranking Engineer, SMX West 2016

It's interesting to hear about the clear-cut separation of church and state - excuse me, paid and organic.

Life of A Query

Paul's explanation of ranking starts with the life of a query, to explain how search works. There are two parts of a search engine:

- Ahead of time (before the query)

- Query processing.

Before the query:

- Crawl the web

- Analyze the crawled pages

- Extract links (the classic version of search)

- Render contents (Javascript, CSS - Paul emphasized the importance of this.)

- Annotate semantics

- Build an index

- Like the index of a book

- For each word, a list of pages it appears on

- Broken up into groups of millions of pages

- These groups are called "shards"

- 1000s of shards for the web index

- Plus per-document metadata.

Source: slide 10 and 11.
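To make the "index of a book" idea concrete for myself, here is a minimal sketch of a sharded index. Everything in it is my own assumption for illustration (the `build_sharded_index` name, the toy pages, and assigning pages to shards by id); Paul didn't describe how Google actually does any of this.

```python
from collections import defaultdict

def build_sharded_index(pages, num_shards):
    """Build one small inverted index per shard.

    pages: dict mapping a page id (int) to the page text.
    Returns a list of dicts, each mapping word -> sorted list of page ids.
    """
    shards = [defaultdict(set) for _ in range(num_shards)]
    for page_id, text in pages.items():
        # Hypothetical sharding rule: pick the shard from the page id.
        shard = shards[page_id % num_shards]
        for word in text.lower().split():
            # For each word, record the pages it appears on.
            shard[word].add(page_id)
    # Turn the sets into sorted lists so the output is stable.
    return [{w: sorted(ids) for w, ids in shard.items()} for shard in shards]

# Toy "crawl" of four pages (made-up data).
pages = {
    0: "texas farm fertilizer",
    1: "farm equipment store",
    2: "texas weather today",
    3: "organic fertilizer guide",
}
index = build_sharded_index(pages, num_shards=2)
print(index[0]["texas"])       # pages in shard 0 that contain "texas"
print(index[1]["fertilizer"])  # pages in shard 1 that contain "fertilizer"
```

Each shard only knows about its own pages, which is why the query has to be sent to every shard later on.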

Query Processing (when someone uses search):

- Query understanding and expansion

- Does the query name known entities?

- Are there useful synonyms?

- Context matters

- Retrieval and scoring

- Send the query to all shards

- Each shard:

- Finds matching pages

- Computes a score for query+page

- Sends back the top N pages by score

- Combine all the top pages

- Sort by score

- Post-retrieval adjustment

- Host clustering (how many pages are from the same domain), sitelinks

- Is there too much duplication?

- Spam demotions, manual actions applied

Source: Slide 12, 13, 14, and 15.
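The retrieval steps above can be sketched in a few lines. This is a toy under loud assumptions: the `score` function (count of matching query words), the `search_shard` and `search` names, the example URLs, and the one-result-per-host rule are all mine, standing in for whatever Google really does at each step.

```python
import heapq

def score(query_words, page_words):
    # Placeholder score: how many query words appear on the page.
    return sum(1 for w in query_words if w in page_words)

def search_shard(shard, query_words, top_n):
    """Find matching pages in one shard and return its top_n (score, url) pairs."""
    scored = []
    for url, text in shard.items():
        s = score(query_words, set(text.lower().split()))
        if s > 0:
            scored.append((s, url))
    return heapq.nlargest(top_n, scored)

def search(shards, query, top_n=2, max_per_host=1):
    query_words = query.lower().split()
    # Send the query to all shards and combine their top pages.
    candidates = []
    for shard in shards:
        candidates.extend(search_shard(shard, query_words, top_n))
    # Sort by score, highest first (ties broken by URL to stay deterministic).
    candidates.sort(key=lambda pair: (-pair[0], pair[1]))
    # Post-retrieval adjustment: a crude stand-in for host clustering.
    results, per_host = [], {}
    for _, url in candidates:
        host = url.split("/")[0]
        if per_host.get(host, 0) < max_per_host:
            results.append(url)
            per_host[host] = per_host.get(host, 0) + 1
    return results

# Two tiny shards with made-up pages.
shards = [
    {"a.example/fertilizer": "texas farm fertilizer",
     "a.example/about": "texas farm history"},
    {"b.example/guide": "farm fertilizer guide",
     "c.example/weather": "texas weather"},
]
results = search(shards, "texas farm fertilizer")
print(results)
```

Note that the second page from `a.example` scores well but gets dropped by the per-host limit, which is the kind of adjustment that happens after scoring rather than during it.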

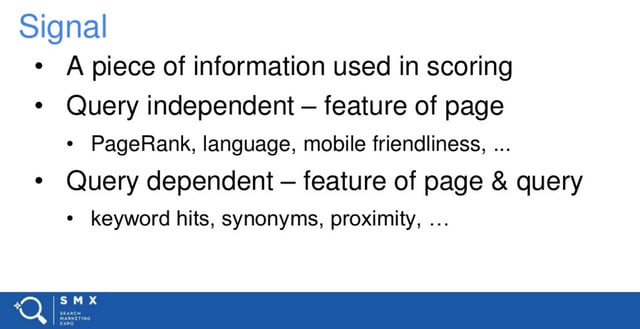

Google Scoring Signals

Paul refers to a single number that represents the match between a query and page.

This number is computed from scoring signals, which fall into two categories:

- Scoring signals based upon a page

- Scoring signals based upon the query.

Slide Eighteen
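Here is how I picture the two categories feeding that single number. This is purely my own illustration: the signals I picked (`mobile_friendly` for the page, keyword hits for the query) and the choice to multiply them together are assumptions, not anything Paul said about how Google combines signals.

```python
def page_signal(page):
    # Based on the page alone: can be computed before any query arrives.
    return 1.0 if page["mobile_friendly"] else 0.5

def query_signal(query, page):
    # Based on the query: fraction of query words that appear on the page.
    words = query.lower().split()
    text = page["text"].lower().split()
    hits = sum(1 for w in words if w in text)
    return hits / len(words)

def combined_score(query, page):
    # Hypothetical combination: a single number for the query+page match.
    return page_signal(page) * query_signal(query, page)

page_a = {"text": "texas farm fertilizer", "mobile_friendly": True}
page_b = {"text": "farm fertilizer guide", "mobile_friendly": False}

print(combined_score("farm fertilizer", page_a))
print(combined_score("farm fertilizer", page_b))
```

Both pages match the query equally well, so in this toy the page-based signal alone decides which one scores higher.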

Here Paul cited version two of the ranking engineer's job: look for new signals, or combine old signals in new ways. Paul described this as "hard and interesting".

Key Metrics in Ranking: Relevance, Quality, Time to Result

Paul emphasized relevance as a key metric in search results. Relevance was basically explained as "matching user intent".

Paul refers to relevance as "our top line metric" and "the big internal metric".

There are two others, as well: quality and time to result (faster being better). Within this presentation, relevance and quality were the focus.

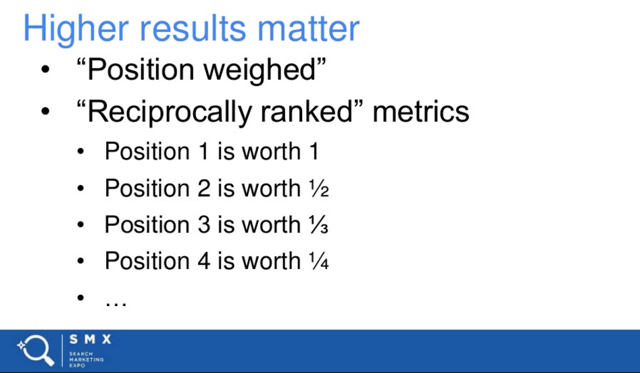

Reciprocal Rank Weighting

CTR (click-through rate) is often discussed in SEO, particularly in relation to search rankings. What's the value of being the top result in organic versus being number two? Versus being number four? This is often discussed and debated. Paul explained Google's point of view, in terms of value.

He defined the idea as "reciprocally ranked weighting":

Slide Twenty Three

To be clear, Paul wasn't discussing CTR, but rather an internal metric that values entire search pages.

The idea is that each position is worth the reciprocal of its rank: position two is worth half as much as position one, position three a third as much, and so on, with number one being worth ten times more than number ten.
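Taking the name literally, reciprocal rank weighting gives the result at position n a weight of 1/n. The sketch below shows that arithmetic. The `position_weight` helper follows directly from the name, but `page_value` (a weighted average of per-result ratings) is my own guess at how such weights might value an entire search page, not something Paul spelled out.

```python
from fractions import Fraction

def position_weight(position):
    # Reciprocal rank: position 1 -> 1, position 2 -> 1/2, position 10 -> 1/10.
    return Fraction(1, position)

def page_value(ratings):
    """Weighted average of per-result ratings, listed from the top result down.

    Hypothetical page-level metric: each rating counts in proportion
    to the reciprocal of its position.
    """
    weights = [position_weight(i) for i in range(1, len(ratings) + 1)]
    return sum(w * r for w, r in zip(weights, ratings)) / sum(weights)

# Position one is worth ten times position ten.
print(position_weight(1) / position_weight(10))

# The same good result (rating 1) is worth more at the top than in second place.
print(page_value([1, 0]))
print(page_value([0, 1]))
```

Using `Fraction` keeps the numbers exact, so the ratios are easy to read off without floating point noise.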

And this brought Paul to version number three of what Google Ranking Engineers do: optimize for our metrics [relevance and quality].

Slide Twenty Four

How Does Google Rate Search Results?

Paul explains there are two ways Google analyzes the efficacy of specific results:

- Live experiments

- Human raters.

Video below:

Live Experiments

Google runs live A/B tests on real traffic, and then looks for changes in click patterns.

"We run a lot of experiments. It is very rare if you do a search on Google and you're not in at least one experiment."

Paul Haahr, Google Ranking Engineer, SMX West 2016

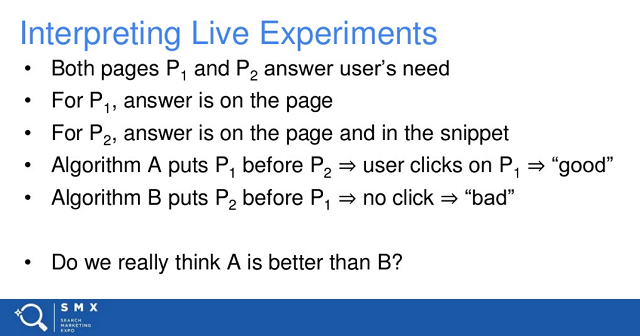

Paul takes a moment to explain that interpreting live experiments is a difficult task.

His primary example (below) is a result with an answer box. Traditionally, if the searcher clicked through to the site, that would be seen as a good result. But what if the searcher saw the answer, was satisfied, and closed out? Traditionally that would be rated as a bad result, but in this case it might actually be a good result.

Slide Thirty

The point of Paul's example is to show how challenging analyzing searcher satisfaction is.

Human Rater Experiments at Google

The most important takeaway from this portion is that mobile-first is again emphasized here, with the majority of human rater experiments taking place on smart phones.

Human rater experiments work as follows:

- Show real people experimental search results

- Ask how good the results are (sliding scale for both relevance and quality)

- Aggregate ratings across raters
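As a rough picture of that last step, here is a minimal sketch that averages slider values per result. The 0-to-1 slider range, the plain mean, and the `aggregate_ratings` name are all my assumptions; Paul didn't say how Google actually aggregates ratings.

```python
from collections import defaultdict
from statistics import mean

def aggregate_ratings(ratings):
    """Average the two sliders per result across all raters.

    ratings: list of (result_id, needs_met, page_quality) tuples,
    where both slider values are assumed to run from 0.0 to 1.0.
    Returns a dict mapping result_id -> (mean needs_met, mean page_quality).
    """
    by_result = defaultdict(list)
    for result_id, needs_met, page_quality in ratings:
        by_result[result_id].append((needs_met, page_quality))
    return {
        result_id: (mean(nm for nm, _ in pairs), mean(pq for _, pq in pairs))
        for result_id, pairs in by_result.items()
    }

# Two hypothetical raters judging the same two results.
ratings = [
    ("result-a", 1.0, 0.5),
    ("result-a", 0.5, 0.5),
    ("result-b", 0.25, 1.0),
    ("result-b", 0.75, 0.5),
]
summary = aggregate_ratings(ratings)
print(summary["result-a"])
print(summary["result-b"])
```

Keeping relevance and quality as separate numbers mirrors the two sliders raters are given, rather than collapsing them into one score.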

Again, it's extremely important to note that Google has published their Search Quality Evaluator Guidelines.

Seriously, watch that clip.

Examples of Search Quality Evaluator Rating

Paul starts going through examples and screenshots of search quality rating experiments at the 16:56 mark.

I recommend watching the video for this part, as much of what Paul says depends heavily on the slides. To follow along, this part of the presentation begins on slide 33.

Two Scales to Judge Results: Relevance and Quality

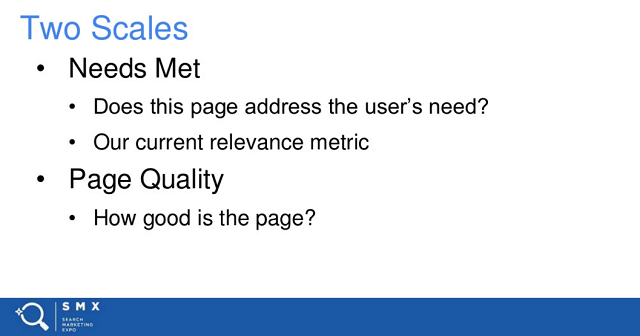

There are two scales Google provides to raters to judge the quality of results:

- Needs Met (relevance)

- Page Quality

Slide Thirty Five

Mobile-First Experiments

Paul also emphasizes mobile-first.

They accomplish this in five ways:

- All relevance instructions are about mobile user needs.

- Mobile queries are used twice as much in experiments.

- The user's location is included in the experiments.

- The tool displays a mobile user experience.

- Raters visit websites using their smart phones.

I can't stress enough how important this is. Google is clearly putting real emphasis on mobile; there's no middle ground here.

Needs Met Rating - Relevance Rating

There are five categories of relevance, which Google labels "needs met":

- Fully Meets

- Highly Meets

- Moderately Meets

- Slightly Meets

- Fails to Meet.

It's important to note that raters do not judge with only five options: they're presented with a sliding scale which can land anywhere in between any of these ratings.

Starting on slide 41 Paul walks through examples of each rating:

Here's the accompanying video:

Important takeaways:

- "Fully meets" can only exist when the query is unambiguous and there is a result that can wholly satisfy whatever the user is intending with the query.

- "Highly meets" sometimes requires two specific, separate results in order to satisfy user intent.

- "Moderately meets" is generally good information.

- "Slightly meets" is acceptable but not great information, with hopefully better results to display.

- "Fails to meet" is laughable, with Paul citing search bugs returning bad results.

Page Quality Rating

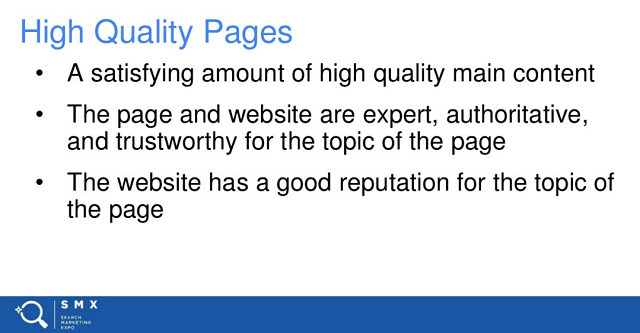

Google looks at three important concepts to describe the quality of a page:

- Expertise

- Authoritativeness

- Trustworthiness.

The scale of quality is from high to low.

High quality pages:

- Satisfying amount of high quality main content.

- Expertise, authority, and trust are clear.

- The website has a good reputation.

Slide Fifty

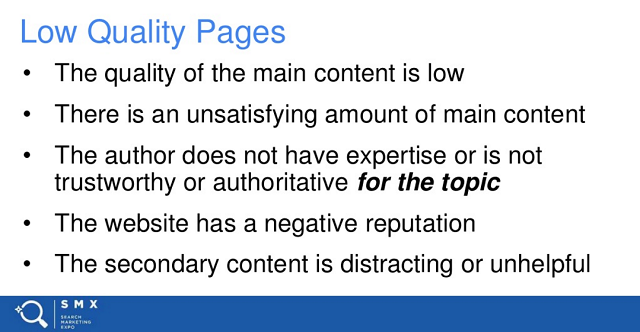

Low quality pages:

- The quality of the content is low

- There is not much main content

- No expertise or authority displayed

- The website has a negative reputation

- The secondary content (ads) are distracting.

Slide Fifty One

Optimizing Search Quality Metrics

A few hundred computer scientists work in ranking engineering. They focus on metrics and signals, run constant experiments, and make lots of changes, all to make Google's search results better (and more accurately reflect the Search Quality Evaluator Guidelines).

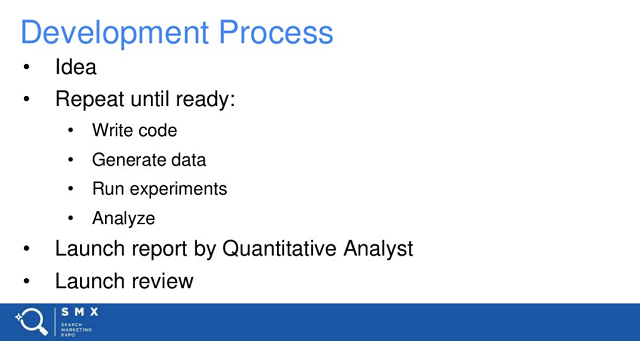

The development process follows a fairly standard software development process.

Slide Fifty Four

Important highlights:

- The process of testing code can take anywhere from weeks to months.

- Quantitative Analysts (basically statisticians) review the data. They keep the ranking engineers honest, providing mostly unbiased analysis of the change.

- A launch review panel reviews a summary of the project, reviews documentation and reports, and debates the merits of the ranking change.

- Actually pushing the update live can be fast or slow, depending on how ready the code is for the algorithm.

Ranking engineers create these updates primarily to move results with good ratings up, and move results with bad ratings down.

What Goes Wrong in the Development Process

Paul talks about two kinds of problems:

- Systematically bad ratings.

- Metrics that don't capture concepts they care about.

Systematically bad ratings

Paul uses the example of [texas farm fertilizer]. It turns out this is a brand of fertilizer, but Google was returning a map to the manufacturer. It's unlikely people actually want a map to the manufacturer; they would rather see the actual product. However, human raters consistently rated this as a "highly meets needs" result.

This led to a pattern of adding more maps, which produced highly rated results but poor live search experiences.

Missing Metrics

Paul cites the quality issue Google was having in 2008-2011, specifically with content farms.

Content farms can produce low quality, highly relevant content. This led to highly rated results which actually were low quality. Content farms are what led to Google implementing the second slider in their human rating experiments, judging the quality of results.

It's absolutely fascinating to hear Paul discuss the issue with content farms and the effect on search quality, and how implementing another metric to judge the efficacy of search results overcame the issue.

It's also fascinating there's no mention of Panda here, which is how Google algorithmically dealt with content farms.

The solution to missing metrics, according to Paul, is to fix rater guidelines or develop new metrics (when necessary).

Slide Sixty Seven

And that's the entirety of Paul's presentation!

Final Thoughts and Takeaways

Just a few final thoughts to wrap up this long post.

- I'm going to read the Search Quality Rater Guidelines sooner rather than later. You should too. Paul emphasized that all changes made in search rankings should reflect that document. Want to understand Google ranking? (as an SEO, the answer should be a resounding "YES!") Then read that document.

- Google truly is mobile-first. All human rater experiments heavily emphasize mobile-first experiences, to a surprising degree.

- Nearly every search includes a live experiment.

- Relevance is the number one metric at Google. They define "relevance" as meeting user (human) needs within search. If you want to rank higher, think about meeting searcher expectations and needs.

- Google places significantly more value on the first result. Under reciprocal rank weighting, the result at position n is worth 1/n of the first result, so result #2 is worth half as much and result #1 is considered ten times more valuable than result #10.

I'm sure there are other important takeaways, but my brain is officially fried. Feedback is welcome — hope you enjoyed the coverage.